Revolutionizing AI/ML: Edgecore’s AGS8200 & Intel® Habana® Gaudi® 2’s Breakthrough

In the rapidly changing digital transformation landscape, where breakthroughs continually push the boundaries of what’s possible, the integration of advanced networking solutions with exceptional AI/ML computational capabilities signals the beginning of a new era. My previous discussion, “Networking Unleashed: Edgecore’s New 800G Tomahawk 5 Switch & SONiC – Elevating AI Beyond GPUs,” established a foundation for understanding the critical role of the latest networking technologies in advancing artificial intelligence applications. Now, we explore further with the introduction of the Edgecore AGS8200—a top-tier GPU-based server designed to not only complement but significantly improve this ecosystem.

As we navigate through the era of AI/ML, it becomes evident that the infrastructure underpinning these technologies must evolve in lockstep. The introduction of Edgecore’s 800G Tomahawk 5 switches with Enterprise SONiC support marked a monumental leap forward, offering the bandwidth and speed necessary to manage the massive data flows inherent in AI/ML processes. However, to truly harness the potential of such advanced networking capabilities, sophisticated computing power is essential. Enter the Edgecore AGS8200, a server that epitomizes the zenith of GPU-based computing, engineered specifically for the rigors of AI/ML applications. Equipped with Intel® Habana® Gaudi® 2 processors, this server stands as a beacon of AI/ML computing evolution, offering unparalleled performance, efficiency, and scalability.

A Harmonious Integration: 800G Networking and AI Servers

The synergy between Edgecore’s Open Networking switches and the AGS8200 GPU-based server is profound. Together, they form an integrated infrastructure that transcends the sum of its parts, creating an ecosystem that significantly amplifies efficiency and capability. The high-speed, high-capacity networking enabled by the 400G / 800G systems ensures swift and efficient data movement across networks – a critical component in AI/ML applications where latency can spell the difference between success and failure. Paired with the computational might of the AGS8200, organizations are equipped with a formidable toolkit to tackle the most challenging AI/ML workloads, from deep learning model training to complex data analysis.

The launch of the Edgecore AGS8200 marks a pivotal moment in the landscape of GPU-based servers, especially for artificial intelligence (AI) and machine learning (ML) applications. This breakthrough is not just a step forward; it represents a quantum leap in computing, fueled by the spirit of open networking and the virtues of disaggregation.

Reflecting on My Journey with Edgecore Networks: From Edge to Core, and Beyond

Reflecting on my tenure at Edgecore Networks, I’ve had the privilege of witnessing the cutting edge of technological evolution from a unique vantage point. Immersed in the team’s unwavering dedication and foresight, the experience of seeing visionary projects come to fruition has been exhilarating. The launch of the Intel® Habana® Gaudi® 2 processors marks a significant milestone in this journey, representing a leap forward in processing power and efficiency that we had eagerly anticipated. With the introduction of the AGS8200, Edgecore Networks has now completed its comprehensive suite of open networking solutions, ranging from advanced 400G and 800G AI Switches with Enterprise SONiC support to pioneering AI Open Servers. This evolution underscores our mantra, “From Edge to Core,” a guiding principle that has steered our innovation from high-capacity network switches to the advent of AI-optimized servers.

As a former member of Edgecore Networks, I’ve witnessed the evolution of our ‘From Edge to Core’ philosophy to encompass ‘From AI Switches to AI Servers,’ reflecting our aim to excel throughout the networking and computing landscape. This expansion not only signifies our drive to dominate both networking and computational domains but also our commitment to forging an integrated ecosystem where every element, from the network’s edge to the core of computational power, is fine-tuned for AI/ML computing demands.

Looking back from where I stand today, outside yet deeply connected to Edgecore Networks, this journey from the edge to the core, and into the sphere of AI servers, represents a poignant chapter of my professional life. It’s a testament to our shared belief in the transformative potential of open networking, our dedication to advancing AI/ML computing, and our collective vision for a future where the full capabilities of artificial intelligence are not just realized but are continuously shaped by the innovations we pioneered. This narrative, “From Edge to Core,” from AI Switches to AI Servers, encapsulates not just a technological progression but a personal and professional journey that continues to inspire and drive me forward, even as I embark on new adventures beyond Edgecore Networks.

Edgecore AGS8200: A Closer Look

In a realm where the pace of innovation is only eclipsed by the speed of thought itself, the Edgecore AGS8200 stands as a monument to human ingenuity and the relentless pursuit of computational excellence. This GPU-based server, infused with the raw power of Intel® Habana® Gaudi® 2 processors and dual Xeon® Sapphire-Rapids processors, is a titan forged to conquer the vast and uncharted territories of AI/ML applications.

System Configuration:

• Form Factor: 8U, 90 x 44.7 x 35.2 cm

• Processor Support: 2 sockets, Intel® Xeon® Platinum 8454H (Sapphire Rapids), 32 cores / 64 threads and 82.5 MB cache per CPU. These Xeon® Scalable processors offer a balance of computing power, energy efficiency, and AI acceleration capabilities.

• Memory: Up to 2 TB, with 16 x DDR5 memory slots per CPU, ensuring ample capacity and speed for demanding AI/ML workloads.

• Storage Options:

o Front Bays: 16 x HDD/SSD + 8 x NVMe or 8 x HDD/SSD + 16 x NVMe, providing versatile and high-speed storage solutions.

o M.2 Slots: 2x M.2 slots, ideal for fast system boot and application loading.

• Expansion Slots:

o 1 x OCP 3.0

o 8 x PCIe Slots

o 8 x NIC Slots

With, of course, GPU Support:

• GPU Configuration: 8 x OAM (Intel Habana HL-225H/C) sweet!

Plus, what we Networking Freaks always need to know 😉 Scale-Out Interfaces:

• 24 x 100G RoCE ports integrated into every Gaudi® 2

• 6 x 400G QSFP-DD to your Leafs!
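For the networking freaks who like to check the math, here is a rough sketch of how the port counts above add up. The per-accelerator and uplink figures come straight from the list; the split of 3 scale-out ports per Gaudi® 2 (with the remaining 21 used for links to the other accelerators inside the chassis) is my assumption and is not stated in this post, so treat the constant `SCALE_OUT_PORTS_PER_GAUDI2` as hypothetical.

```python
# Figures taken from the spec list above.
GAUDI2_PER_SERVER = 8        # 8 x OAM
PORTS_PER_GAUDI2 = 24        # 24 x 100G RoCE ports per accelerator
PORT_SPEED_GBPS = 100
QSFP_DD_PORTS = 6            # 6 x 400G QSFP-DD towards the leaf switches
QSFP_DD_SPEED_GBPS = 400

# ASSUMPTION (not from this post): 3 of the 24 ports on each accelerator
# are routed out of the chassis; the other 21 stay inside the server.
SCALE_OUT_PORTS_PER_GAUDI2 = 3


def per_accelerator_gbps() -> int:
    """Total RoCE bandwidth integrated into a single Gaudi 2."""
    return PORTS_PER_GAUDI2 * PORT_SPEED_GBPS


def scale_out_gbps() -> int:
    """Aggregate bandwidth leaving the server under the assumed port split."""
    return GAUDI2_PER_SERVER * SCALE_OUT_PORTS_PER_GAUDI2 * PORT_SPEED_GBPS


def uplink_gbps() -> int:
    """Aggregate capacity of the QSFP-DD cages on the chassis."""
    return QSFP_DD_PORTS * QSFP_DD_SPEED_GBPS


if __name__ == "__main__":
    print(f"Per Gaudi 2:        {per_accelerator_gbps()} Gb/s")
    print(f"Scale-out (assumed): {scale_out_gbps()} Gb/s")
    print(f"QSFP-DD uplinks:     {uplink_gbps()} Gb/s")
```

Under that assumption, the scale-out bandwidth of the eight accelerators matches the capacity of the six 400G cages, which would explain why the chassis exposes exactly six of them.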

Additional Cooling:

• Cooling Design: Advanced cooling system with redundant, 14+1 hot-swappable fans and optimized airflow design to ensure stable operation under heavy computational loads, maintaining performance integrity of GPUs and CPUs.

And Power Supply:

• System: 1+1 CRPS 2700 W redundant/hot-swappable AC/DC

• GPU: 3+3 CRPS 3000 W redundant/hot-swappable AC/DC
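To put the two power domains in perspective, here is a minimal sketch of the power budget. It assumes the usual reading of "N+M" redundancy, where N supplies carry the load and M are spares; the class and field names are my own and purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PsuGroup:
    """One redundant power domain, read as 'active + spare' supplies."""
    name: str
    active: int   # supplies needed to carry the load (the N in N+M)
    spare: int    # redundant supplies (the M in N+M)
    watts: int    # rating of a single supply

    @property
    def usable_watts(self) -> int:
        # Capacity that remains available even if every spare is lost.
        return self.active * self.watts

    @property
    def installed_watts(self) -> int:
        # Nameplate capacity of all supplies in the group.
        return (self.active + self.spare) * self.watts


# Values from the list above.
system = PsuGroup("System", active=1, spare=1, watts=2700)
gpu = PsuGroup("GPU", active=3, spare=3, watts=3000)

total_usable = system.usable_watts + gpu.usable_watts
total_installed = system.installed_watts + gpu.installed_watts

if __name__ == "__main__":
    for group in (system, gpu):
        print(f"{group.name}: {group.usable_watts} W usable, "
              f"{group.installed_watts} W installed")
    print(f"Total: {total_usable} W usable, {total_installed} W installed")
```

These are supply ratings, not measured draw, so they only give an upper bound for rack and PDU planning.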

The Power of Intel® Gaudi® 2

Intel® Gaudi® 2 key benefits

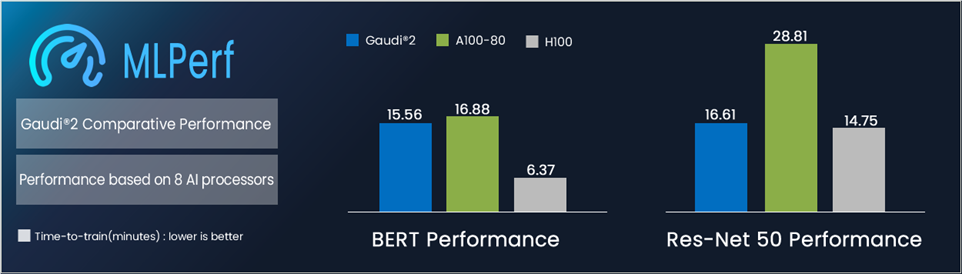

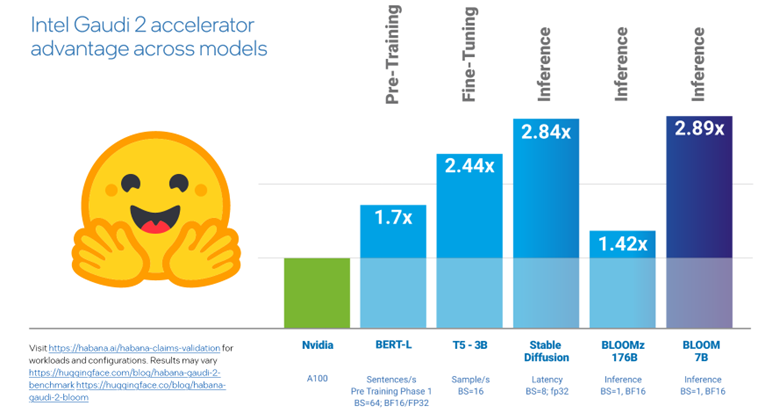

- With BF16 software, Intel® Gaudi® 2 could outperform its competitors.

- Intel® Gaudi® 2 delivers 2x the performance of its competitor.

- Intel® Gaudi® 2 is the only viable alternative to N brand GPU for LLM (Large Language Models) training and inferencing.

- On the MLPerf Benchmark, Intel® Gaudi® 2 is one of only two accelerators used for GPT-3 training.

- With FP8 software, Intel® Gaudi® 2 is expected to have a better price-performance ratio than its competitor.

What Is MLPerf?

MLPerf™ benchmarks, developed by MLCommons, a consortium of AI leaders from academia, research labs, and industry, are designed to provide unbiased evaluations of training and inference performance for hardware, software, and services. They’re all conducted under prescribed conditions. To stay on the cutting edge of industry trends, MLPerf continues to evolve, holding new tests at regular intervals and adding new workloads that represent the state of the art in AI.

In addition to the MLPerf industry benchmark, the Intel® Gaudi® 2 AI accelerator also scores well on other third-party evaluations.

A Performance Per Dollar Paradigm Shift

The comparison between Intel Gaudi2 and NVIDIA’s H100, as detailed by ServeTheHome, brings to light a critical metric in today’s computing landscape: performance per dollar. The Intel Gaudi2, integral to the AGS8200’s architecture, emerges as a formidable force, boasting up to 4 times better performance per dollar than its NVIDIA counterpart. This isn’t just a marginal improvement; it’s a quantum leap in value that democratizes high-performance AI/ML computing, making it accessible to a broader spectrum of users and applications.
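For readers who want to see how such a ratio is formed, here is a small sketch of the metric itself. Performance per dollar is simply throughput divided by price, and the comparison is the quotient of two such values. The numbers below are arbitrary placeholders chosen to make the arithmetic easy to follow; they are not measurements or list prices for any real accelerator, and the function names are my own.

```python
def perf_per_dollar(throughput: float, price: float) -> float:
    """Throughput (any consistent unit, e.g. samples/s) per unit of price."""
    if price <= 0:
        raise ValueError("price must be positive")
    return throughput / price


def relative_value(a_throughput: float, a_price: float,
                   b_throughput: float, b_price: float) -> float:
    """How many times better A's performance per dollar is than B's."""
    return (perf_per_dollar(a_throughput, a_price)
            / perf_per_dollar(b_throughput, b_price))


# PLACEHOLDER values, purely illustrative:
# accelerator A has half the throughput of B at one eighth of the price.
ratio = relative_value(a_throughput=50.0, a_price=10_000.0,
                       b_throughput=100.0, b_price=80_000.0)

if __name__ == "__main__":
    print(f"A offers {ratio:.1f}x the performance per dollar of B")
```

The point of the placeholder case is that a part does not need the highest raw throughput to win on this metric; price moves the ratio just as much as speed does.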

Why Performance Per Dollar Matters

In the domain of AI/ML, where computational demands are incessantly escalating, the efficiency of investment becomes paramount. Organizations are constantly balancing the scales between computational needs and budgetary constraints. The AGS8200’s superior performance per dollar ratio ensures that investments translate directly into tangible outcomes, maximizing the impact of every dollar spent on AI/ML infrastructure.

- Cost-Efficient Scaling: As AI/ML models grow in complexity, the AGS8200 allows for scalable solutions that do not compromise on performance or financial efficiency. This scalability is crucial for startups and established enterprises alike, ensuring that cutting-edge AI/ML capabilities are within reach without exorbitant costs.

- Enhanced Research and Development: The financial efficiency of the AGS8200 frees up resources for further innovation and research within AI/ML fields. Institutions can allocate saved funds towards advancing the state of the art in AI, fostering an environment ripe for breakthroughs.

- Broadened Access to AI/ML Technologies: By lowering the financial barrier to entry, the AGS8200 enables a wider array of organizations to harness the power of advanced AI/ML computing. This democratization of technology is pivotal in driving forward societal advancements and solving complex global challenges.

Harnessing Open Networking for Maximum Flexibility

The AGS8200 exemplifies the principles of open networking and disaggregation, surpassing basic performance and cost considerations. This strategy allows users to customize their computing infrastructure for precise requirements while promoting a culture of innovation and teamwork.

A New Epoch in AI/ML Computing

The Edgecore AGS8200, with its integration of Intel® Habana® Gaudi® 2 processors, does not merely offer an alternative to NVIDIA’s solutions; it heralds a new era in AI/ML computing. By significantly outperforming NVIDIA in terms of performance per dollar, the AGS8200 presents a compelling case for organizations striving to lead in the AI/ML arena. Coupled with the advantages of open networking, the AGS8200 stands as a beacon of innovation, accessibility, and efficiency.

In my journey with Edgecore Networks, witnessing the culmination of efforts into the AGS8200 has been both exhilarating and affirming. As we stand at the threshold of this new epoch, the AGS8200 is not just a server; it’s a gateway to unlocking the full potential of AI/ML applications, making the future of computing not just a vision, but a tangible, accessible reality. And you know what…? This is just the beginning! 😉 Those who know me in person have heard that a few times! 😊