

Networking Unleashed: Edgecore’s New 800G Tomahawk 5 Switch & SONiC – Elevating AI Beyond GPUs

In a world captivated by the dazzling advancements in Artificial Intelligence and Machine Learning, the limelight often falls on the raw power of GPUs, the celebrated engines driving these technological marvels. However, beneath this surface of computational wizardry lies an unsung hero, quietly yet indispensably shaping the AI/ML landscape: Networking. As a fervent advocate of open networking, I’ve witnessed the transformative impact of this critical component, often overshadowed by its more glamorous counterparts.

Networking, in all its glory, forms the backbone of modern AI/ML applications. It's a realm where every microsecond counts and where a 10% performance improvement can materially change outcomes. This is not just a theoretical claim: in the following sections, we'll look at evidence from a Broadcom study showing Ethernet outperforming InfiniBand by 10% in certain AI applications. It's a testament to how networking is not just a supporting character in AI/ML but a central player in its unfolding saga.

My journey through the dynamic world of open networking has cemented my belief in its critical role in AI/ML. The advancements in networking technology, the nuances of data transfer efficiencies, and the relentless pursuit of faster, more reliable connections are not just engineering challenges; they are the catalysts propelling AI to new heights. This article is a narrative of this journey, a story that goes beyond GPUs and silicon to explore the very veins that sustain AI’s computational body – the intricate world of networking.

As we embark on this exploration, remember that every breakthrough in AI/ML is as much a triumph of networking as it is of processing power. The following sections unravel the layers of this often-overlooked domain, illustrating why networking deserves its share of the spotlight in the AI revolution.

Innovations in Networking Hardware: Edgecore 800G Tomahawk 5

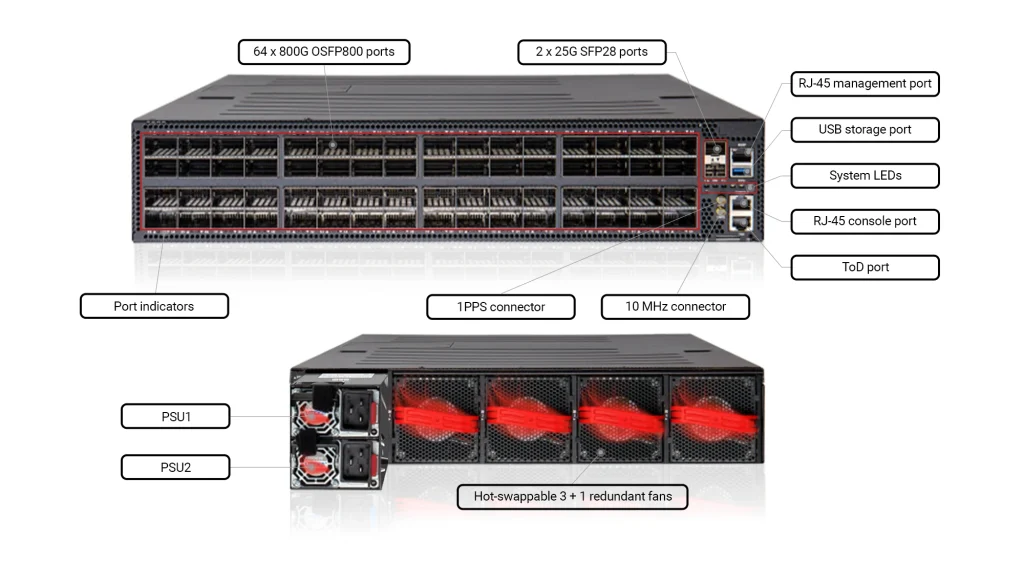

In the ever-evolving landscape of AI and ML, the hardware that forms the backbone of networking infrastructure must not only keep pace but also set the benchmark for innovation and performance. The Edgecore 800G Tomahawk 5 is a prime example of such pioneering technology, representing a significant leap in networking capabilities tailored for the demands of modern data centers and AI applications. Let’s unpack the features that make this hardware a game-changer in the field:

- Unprecedented Bandwidth Capacity: The Edgecore 800G Tomahawk 5 boasts an astonishing bandwidth capacity of 51.2 terabits per second. This immense bandwidth is critical in handling the huge data flows inherent in AI and ML applications, ensuring that data-intensive tasks are executed smoothly and efficiently.

- Advanced Port Technology: The series comes equipped with OSFP800 switch ports, which support several configurations: 1 x 800 GbE natively and, through breakout cables, 2 x 400 GbE, 4 x 200 GbE, or 8 x 100 GbE. This flexibility in port configurations allows for tailored solutions to meet diverse data center needs, enhancing the adaptability of the network infrastructure.

- Innovative Chip Technology with Broadcom Tomahawk 5 Silicon: At its core, the Edgecore 800G Tomahawk 5 incorporates Broadcom’s latest Tomahawk 5 silicon. This cutting-edge chip technology delivers low latency and high radix. The 5nm process technology used in this chip not only boosts performance but also significantly enhances power efficiency – a critical factor in large-scale deployments.

- Advanced Networking Features: The hardware is equipped with state-of-the-art networking capabilities such as cognitive/adaptive routing and dynamic load balancing. These features adjust forwarding to current network conditions, improving the overall efficiency of data flow and reducing latency. Furthermore, advanced shared buffering absorbs traffic bursts, and programmable in-band telemetry provides on-chip visibility for deeper network insight and more efficient management.

- Energy Efficiency and Sustainability: Given how energy-intensive data centers are, the energy efficiency of the Edgecore 800G Tomahawk 5 matters. Its power-efficient design not only reduces operational costs but also aligns with the growing emphasis on sustainability in the tech industry.
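The bandwidth and breakout figures above are consistent with each other, and a few lines of arithmetic make that visible. The sketch below assumes a front panel of 64 OSFP800 ports (not stated above; it follows from 51.2 Tb/s divided by 800 Gb/s) and 8 electrical lanes of 100G per 800G port. The mode strings mimic the `NxSPEEDG` style of SONiC breakout modes, but the helper names are hypothetical and this is not Edgecore or SONiC code.

```python
from dataclasses import dataclass

SWITCH_CAPACITY_GBPS = 51_200  # Tomahawk 5: 51.2 Tb/s
PORT_SPEED_GBPS = 800          # one OSFP800 port
LANES_PER_PORT = 8             # assumption: 8 x 100G SerDes lanes per 800G port


@dataclass(frozen=True)
class BreakoutMode:
    members: int            # logical ports created from one physical 800G port
    member_speed_gbps: int  # speed of each logical port

    @property
    def name(self) -> str:
        # e.g. "2x400G" (naming style similar to SONiC breakout modes)
        return f"{self.members}x{self.member_speed_gbps}G"

    @property
    def lanes_per_member(self) -> int:
        return LANES_PER_PORT // self.members


def breakout_modes() -> list[BreakoutMode]:
    """The four configurations listed above: 1x800, 2x400, 4x200, 8x100."""
    return [BreakoutMode(n, PORT_SPEED_GBPS // n) for n in (1, 2, 4, 8)]


def front_panel_ports() -> int:
    """Number of 800G ports needed to expose the full switch capacity."""
    return SWITCH_CAPACITY_GBPS // PORT_SPEED_GBPS


if __name__ == "__main__":
    ports = front_panel_ports()
    print(f"{ports} x {PORT_SPEED_GBPS}G ports = {ports * PORT_SPEED_GBPS / 1000} Tb/s")
    for mode in breakout_modes():
        print(f"{mode.name:>7}: {ports * mode.members} logical ports, "
              f"{mode.lanes_per_member} lane(s) each")
```

Every mode keeps the same 51.2 Tb/s total; breakout only trades port count against per-port speed, down to 512 x 100G ports with one lane each in the 8x100G mode.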

Looking Ahead: Edgecore’s Integration of Jericho3-AI and Ramon3 Technologies

As impressive as the Edgecore 800G Tomahawk 5 is, the future of networking technology holds even more promise. As Edgecore integrates Broadcom's Jericho3-AI and Ramon3 technologies into its upcoming systems, we can expect a blend of intricate port configurations and advanced AI networking capabilities:

- Jericho3-AI-Based Network Cloud Packet Forwarder (NCP):
  - Switching Capacity and Ports: 14.4 Tbps full-duplex switching capacity, with 18x800G OSFP network interface ports and 20x800G OSFP fabric interface ports.
  - Enhanced AI Networking Features:
    - Perfect Load Balancing: Ensures even traffic distribution across all fabric links, maximizing network utilization.
    - Congestion-Free Operation: End-to-end traffic scheduling prevents flow collisions and eliminates jitter.
    - Ultra-High Radix: Scales to connect up to 32,000 GPUs, each at 800 Gbps, in a single cluster.
    - Zero-Impact Failover: Sub-10ns automatic path convergence for uninterrupted job completion.
- Ramon3-Based Network Cloud Fabric (NCF) Engine:
  - High Switching Capacity: The Ramon3-based NCF engine supports a 51.2 Tbps switching capacity.
  - Advanced Networking Technologies: Includes long-reach SerDes, distributed buffering, and advanced telemetry using standard Ethernet, enhancing network capability and analytics.
- Building Scalable AI/ML Clusters:
  - Dual-Ramon3 System: This 6RU fabric switch with 128x800G fabric ports (dual 51.2 Tbps) is designed for large-scale AI/ML clusters.
  - Jericho3-AI System: This 2RU leaf switch with 18x800G Ethernet network interface ports (14.4 Tbps) enables the creation of extensive AI/ML clusters, offering significant scalability and flexibility.
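The port counts above can be cross-checked with simple arithmetic. The sketch below assumes one 800G network port per GPU and no oversubscription (neither is stated above). Under those assumptions it shows that each Jericho3-AI leaf has about 11% more bandwidth toward the fabric (20 x 800G = 16 Tb/s) than toward the GPUs (18 x 800G = 14.4 Tb/s), and that a 32,000-GPU cluster implies on the order of 1,778 leaves. It deliberately does not estimate the number of Ramon3 fabric systems, since that depends on the fabric topology.

```python
import math

PORT_GBPS = 800        # every port in the figures above is 800G
NETWORK_PORTS = 18     # Jericho3-AI: ports facing GPUs/NICs
FABRIC_PORTS = 20      # Jericho3-AI: ports facing the Ramon3 fabric
CLUSTER_GPUS = 32_000  # "up to 32,000 GPUs, each at 800 Gbps"


def tbps(ports: int, gbps_per_port: int = PORT_GBPS) -> float:
    """Aggregate bandwidth of `ports` ports, in Tb/s."""
    return ports * gbps_per_port / 1000


def fabric_speedup(network_ports: int = NETWORK_PORTS,
                   fabric_ports: int = FABRIC_PORTS) -> float:
    """Fabric-side bandwidth divided by network-side bandwidth on one leaf."""
    return fabric_ports / network_ports


def leaves_needed(gpus: int = CLUSTER_GPUS,
                  ports_per_leaf: int = NETWORK_PORTS) -> int:
    """Leaf count, assuming one 800G network port per GPU (no oversubscription)."""
    return math.ceil(gpus / ports_per_leaf)


if __name__ == "__main__":
    print(f"network side: {tbps(NETWORK_PORTS)} Tb/s")   # 14.4
    print(f"fabric side:  {tbps(FABRIC_PORTS)} Tb/s")    # 16.0
    print(f"fabric speedup: {fabric_speedup():.3f}")     # 1.111
    print(f"leaves for {CLUSTER_GPUS:,} GPUs: {leaves_needed():,}")
    print(f"GPU-facing bandwidth: {tbps(CLUSTER_GPUS) / 1000} Pb/s")
```

The extra fabric bandwidth per leaf gives the fabric headroom beyond the traffic the GPUs can inject, which is one way to read the "perfect load balancing" and "congestion-free operation" claims above.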

Edgecore’s Vision for AI Networking

Edgecore's integration of Broadcom's Jericho3-AI and Ramon3 technologies heralds a new era in AI networking, combining high-capacity port configurations with advanced features optimized for AI workloads. These systems are not just designed to meet the demands of current AI applications but are also geared towards accommodating the future growth and complexity of AI networks. With this integration, Edgecore is set to provide state-of-the-art networking solutions that are efficient, scalable, and tailored to the ever-evolving needs of AI and ML technologies.

SONiC Software: Catalyzing Open Networking in AI

In the realm of AI and modern computing, the synergy between hardware and software dictates the efficiency and scalability of network infrastructures. SONiC, standing at the forefront of open networking software, plays a pivotal role in this ecosystem, especially when paired with advanced hardware like the Edgecore 800G Tomahawk 5. Here’s how SONiC is transforming the networking landscape:

- Open Source Flexibility and Innovation: SONiC is an open-source platform that brings the benefits of community-driven innovation to networking. Its open nature encourages continuous improvement and adaptation, allowing it to evolve rapidly in response to the ever-changing demands of AI and ML workloads. This flexibility makes it an ideal match for the versatile and powerful Edgecore 800G Tomahawk 5 and the upcoming DNX (Jericho3-AI and Ramon3) platforms.

- Scalability and Customization: One of SONiC’s key strengths is its scalability, which is crucial for managing the extensive network topologies required in AI applications. It supports a wide range of network devices and configurations, making it highly adaptable to various networking needs. This scalability is perfectly complemented by the hardware capabilities of the Edgecore 800G Tomahawk 5, together offering a robust solution for growing AI networks.

- Advanced Network Features and Efficiency: SONiC brings a suite of advanced networking features such as streaming telemetry and congestion management (for example, ECN marking and priority flow control for lossless RDMA traffic), and, depending on platform support, access to silicon features like cognitive routing. Combined with the high-performance specs of the Edgecore 800G Tomahawk 5, these features result in an intelligent and efficient networking system. The software's ability to provide detailed network insights and dynamic routing decisions significantly enhances the overall performance of AI networking infrastructures.

- Streamlined Management and Automation: In large-scale AI deployments, the complexity of network management can be daunting. SONiC simplifies this aspect with its automated network management capabilities. Its integration with the Edgecore 800G hardware allows for easier deployment, monitoring, and maintenance of AI network infrastructures, reducing the operational burden and allowing more focus on innovation and development.

- Community and Ecosystem Support: The SONiC community, backed by major industry players, ensures that the platform is not only technically robust but also has a roadmap aligned with the future of networking technologies. This community support, combined with the hardware advancements from Edgecore, ensures a comprehensive and future-proof networking solution.
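Congestion management for RoCE traffic in AI fabrics commonly relies on switches marking packets with ECN according to a WRED-style profile on each queue; SONiC configures such profiles through its `WRED_PROFILE` table. The sketch below models only the generic marking curve (the RED-with-ECN shape also used by DCQCN), with hypothetical thresholds. It is not SONiC or Broadcom code, and real thresholds are platform- and workload-specific.

```python
def ecn_mark_probability(queue_bytes: int, min_th: int, max_th: int,
                         max_prob: float) -> float:
    """ECN marking probability for a given (average) queue depth.

    Below min_th nothing is marked; between the thresholds the probability
    rises linearly from 0 to max_prob; at or above max_th every packet
    is marked.
    """
    if not 0 <= min_th < max_th:
        raise ValueError("require 0 <= min_th < max_th")
    if not 0.0 <= max_prob <= 1.0:
        raise ValueError("max_prob must be in [0, 1]")
    if queue_bytes < min_th:
        return 0.0
    if queue_bytes >= max_th:
        return 1.0
    return max_prob * (queue_bytes - min_th) / (max_th - min_th)


# Hypothetical profile: 1 MiB / 2 MiB thresholds, 5% maximum marking probability.
MIN_TH, MAX_TH, MAX_P = 1 << 20, 2 << 20, 0.05

for depth_kib in (512, 1024, 1536, 2048):
    p = ecn_mark_probability(depth_kib * 1024, MIN_TH, MAX_TH, MAX_P)
    print(f"queue {depth_kib:>4} KiB -> mark probability {p:.3f}")
```

Marking early and gently, before buffers fill, lets senders slow down without the switch ever dropping lossless traffic; the thresholds set how much queueing delay is tolerated before that signal starts.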

The Perfect Match in Open Networking

The combination of SONiC software and Edgecore 800G Tomahawk 5 hardware epitomizes the essence of open networking. It’s a partnership that leverages the best of both worlds – the innovation and flexibility of open-source software with the raw power and efficiency of cutting-edge hardware. For AI and ML applications, this means networks that are not only faster and more reliable but also scalable and adaptable to the fast-paced evolution of technology.

SONiC’s integration with Edgecore’s advanced networking hardware is a beacon of progress in the open networking world. It symbolizes a commitment to openness, innovation, and performance, aligning perfectly with the needs of modern AI and ML infrastructures. This synergy is not just about meeting current demands but is a forward-looking approach, paving the way for the networking technologies of tomorrow.

Ethernet vs. InfiniBand: Unveiling the 10% Performance Advantage

The debate between Ethernet and InfiniBand in the context of AI networking has been the subject of extensive research and experimentation. A pivotal study conducted by Broadcom, led by Mohan Kalkunte, sheds new light on this discussion, revealing a critical advantage of Ethernet in certain AI applications.

- Broadcom’s Groundbreaking Research: The research conducted by Broadcom’s team provided a comprehensive analysis of the two technologies. It wasn’t just a comparison of theoretical capabilities but a deep dive into real-world performance metrics. The findings, especially significant in the realm of AI and ML, indicated that in certain scenarios, Ethernet demonstrated a remarkable 10% improvement over InfiniBand.

- Implications of the 10% Performance Boost: This 10% edge is more than just a number. In the high-stakes domain of AI and ML, where data throughput and latency are crucial, a 10% increase in performance can translate into substantial gains. Considering the astronomical costs associated with GPUs and other AI infrastructure components, this boost in networking efficiency could effectively offset significant portions of network-related expenses.

- Cost Savings and Operational Efficiency: The enhanced performance of Ethernet, as highlighted by Broadcom's research, means that the same output requires fewer resources. The resulting savings can be large enough to offset the cost of the network itself, making it almost 'free' relative to the overall investment in AI infrastructure.

- Strategic Implications for AI Deployments: For organizations deploying AI at scale, this finding is strategically significant. It suggests that by opting for Ethernet over InfiniBand, they can achieve better performance without proportionally increasing their investment in networking infrastructure. This aspect becomes even more crucial when considering large-scale deployments where the cost dynamics play a pivotal role.

- Future-Proofing AI Networks: The continuous evolution of Ethernet, as demonstrated by research like this, positions it as a future-proof technology for AI networking. With its scalability, cost-effectiveness, and the performance advantage shown in Broadcom's study, Ethernet is well-suited to adapt to the growing and evolving demands of AI and ML applications.
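The "almost free" argument above is back-of-the-envelope arithmetic, and a short sketch makes it explicit. All figures here are hypothetical placeholders, not data from Broadcom's study. The model assumes the 10% improvement appears as 10% less GPU time per training job, so the same GPU fleet completes proportionally more work; that extra work is valued at what it would cost to buy the equivalent additional GPUs.

```python
def network_breakeven(gpu_capex: float, network_capex: float,
                      time_saved: float) -> dict[str, float]:
    """Compare the value of GPU time freed by a faster network with its cost.

    time_saved is the fractional reduction in job completion time
    (0.10 for 10%). Finishing each job in (1 - time_saved) of the time
    means the fleet does 1 / (1 - time_saved) times as much work.
    """
    if not 0.0 <= time_saved < 1.0:
        raise ValueError("time_saved must be in [0, 1)")
    if network_capex <= 0:
        raise ValueError("network_capex must be positive")
    throughput_gain = 1.0 / (1.0 - time_saved) - 1.0
    equivalent_gpu_value = gpu_capex * throughput_gain
    return {
        "throughput_gain": throughput_gain,
        "equivalent_gpu_value": equivalent_gpu_value,
        # > 1.0 means the freed GPU capacity is worth more than the whole network
        "covers_network_share": equivalent_gpu_value / network_capex,
    }


# Hypothetical cluster: 100 M (any currency) of GPUs and 10 M of network.
result = network_breakeven(gpu_capex=100e6, network_capex=10e6, time_saved=0.10)
for key, value in result.items():
    print(f"{key}: {value:,.3f}")
```

With these placeholder numbers, a 10% shorter job time yields about 11% more throughput, and the freed GPU capacity slightly exceeds the entire network budget. With a larger network share or a smaller gain, the conclusion can flip, so the inputs matter more than the headline percentage.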

For a more in-depth understanding, you can watch the full analysis here: Broadcom Study Video.

Ethernet’s Strategic Advantage in AI Networking

The detailed research led by Mohan Kalkunte and Broadcom’s team is a significant contribution to the field of AI networking. It not only challenges the conventional preference for InfiniBand but also establishes Ethernet as a superior choice in certain AI applications. The 10% performance improvement is not just a technical victory; it’s a strategic advantage that can redefine the cost-efficiency and scalability of AI infrastructures. For organizations navigating the complex waters of AI deployment, this finding offers a clear direction towards Ethernet for a more efficient, cost-effective, and future-ready networking solution.

The Ultra Ethernet Consortium: Pioneering the Future of Networking

The Ultra Ethernet Consortium (UEC) represents a pivotal initiative in the evolution of networking technologies, particularly in the realms of AI and high-performance computing (HPC). This consortium, with significant contributions from industry leaders like Edgecore and Broadcom, is driving innovation to meet the burgeoning demands of modern computing infrastructures.

- Mission and Vision of UEC: The primary mission of the UEC is to optimize Ethernet to exceed the performance traditionally expected from specialized networking technologies. This involves developing a complete architecture that caters to the high-performance demands of AI and HPC networking, focusing on functionality, performance, total cost of ownership (TCO), and user-friendliness, all while maintaining Ethernet’s core attribute of interoperability.

- Edgecore and Broadcom’s Active Role: Both Edgecore and Broadcom play instrumental roles in UEC’s mission. Their expertise in developing cutting-edge networking hardware and chip technology, respectively, is crucial in shaping the Consortium’s direction. Their involvement ensures that the UEC’s goals are grounded in practical, scalable, and innovative solutions that can be rapidly deployed in real-world settings.

- Addressing Modern Networking Challenges: The UEC is dedicated to bridging the gaps present in current AI and HPC workloads, which demand higher scale, bandwidth density, multi-pathing capabilities, and fast congestion reaction. The specifications and standards being developed under the UEC are designed to effectively address these requirements, offering increased scale and enhanced functionality that are vital for modern networking infrastructures.

- Advancing Beyond Current Limitations: The UEC’s initiatives are not just about keeping pace with current demands but are also focused on advancing beyond the limitations of existing protocols. By leveraging the collective experience and expertise of its members, the UEC is positioned to offer comprehensive solutions that existing Ethernet or other networking technologies do not currently provide.

- Global Collaboration and Future Outlook: Organized under The Linux Foundation and recognized internationally, the UEC fosters global participation and has a diverse international membership. This collaborative approach ensures that the innovations and standards developed by the UEC will have a broad and lasting impact on the Ethernet networking landscape. The Consortium’s roadmap includes the development and release of technical specifications that are expected to set new benchmarks in Ethernet-based networking.
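Multi-pathing is easiest to see by contrast with static ECMP, where a hash of a flow's 5-tuple pins every packet of that flow to one link, so two large AI flows can collide on the same link while others sit idle. One well-known alternative, flowlet switching, lets a flow move to the least-loaded link whenever it pauses for longer than an idle gap, without reordering packets inside a burst. The sketch below illustrates only that general idea. It is not the UEC specification, nor Broadcom's dynamic load balancing or cognitive routing, and the load model, gap value, and addresses are hypothetical.

```python
import zlib

FiveTuple = tuple[str, str, int, int, int]  # src IP, dst IP, proto, src port, dst port


class FlowletBalancer:
    """Toy flowlet-based load balancer over `num_links` equal-cost links.

    Load is approximated by bytes sent per link so far, a deliberately crude
    stand-in for the queue/utilization measurements real switches use.
    """

    def __init__(self, num_links: int, idle_gap_us: float) -> None:
        self.num_links = num_links
        self.idle_gap_us = idle_gap_us  # hypothetical flowlet timeout
        self.link_bytes = [0] * num_links
        self._last: dict[FiveTuple, tuple[int, float]] = {}  # flow -> (link, last seen)

    def static_ecmp(self, flow: FiveTuple) -> int:
        """Static ECMP: a deterministic hash pins the whole flow to one link."""
        return zlib.crc32(repr(flow).encode()) % self.num_links

    def pick_link(self, flow: FiveTuple, now_us: float, size: int) -> int:
        """Flowlet switching: keep the link inside a burst, re-pick after a gap."""
        prev = self._last.get(flow)
        if prev is not None and now_us - prev[1] < self.idle_gap_us:
            link = prev[0]  # same flowlet: stay on the path to avoid reordering
        else:
            # New flow or idle gap exceeded: move to the least-loaded link.
            link = min(range(self.num_links), key=lambda i: self.link_bytes[i])
        self._last[flow] = (link, now_us)
        self.link_bytes[link] += size
        return link


if __name__ == "__main__":
    lb = FlowletBalancer(num_links=4, idle_gap_us=50.0)
    a: FiveTuple = ("10.0.0.1", "10.0.1.1", 17, 49152, 4791)  # RoCEv2: UDP port 4791
    b: FiveTuple = ("10.0.0.2", "10.0.1.2", 17, 49153, 4791)
    print(lb.pick_link(a, 0.0, 4096), lb.pick_link(b, 1.0, 4096))  # 0 1
    print(lb.pick_link(a, 10.0, 4096))   # 0: still inside the flowlet
    print(lb.pick_link(a, 200.0, 4096))  # 2: gap exceeded, least-loaded link
```

Choosing an idle gap larger than the worst delay difference between paths is what keeps packets in order. The trade-off is that long flows without pauses stay pinned to one link, which is one motivation for finer-grained techniques such as per-packet spraying.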

UEC’s Role in Redefining High-Performance Networking

The Ultra Ethernet Consortium, with its focus on developing an optimized, scalable, and cost-effective Ethernet architecture, is set to transform the networking landscape. It represents not just a technological advancement but also highlights the importance of collaborative efforts in driving innovation in networking. With the active participation of industry giants like Edgecore and Broadcom, the UEC is well-positioned to revolutionize Ethernet networking, making it more adaptable, efficient, and prepared to meet the challenges of modern and future computing demands.

Embracing the Future of AI Networking with Open Solutions

As we approach the end of our journey into the exciting world of AI networking, it’s clear that the landscape is undergoing a transformative shift, driven by open networking principles and innovative technologies.

- The Vital Role of Networking: Our journey began with an acknowledgment of the critical role networking plays in AI and ML applications. We’ve seen how it serves as the unsung hero, enabling the rapid data transfer capabilities that are crucial for leveraging the full potential of advanced computing technologies.

- Ethernet's Strategic Edge Over InfiniBand: Perhaps one of the most significant findings discussed is the 10% performance advantage that Ethernet, including solutions like the Edgecore 800G Tomahawk 5, can hold over InfiniBand in certain AI applications. This edge is not merely a technical victory but a strategic one, offering significant cost savings and operational efficiencies in AI infrastructures.

- The Rise of SONiC in Open Networking: The integration of SONiC (Software for Open Networking in the Cloud) with cutting-edge hardware like the Edgecore 800G Tomahawk 5 is a testament to the power of open-source innovation in networking. SONiC’s adaptability, scalability, and advanced features enrich the AI networking landscape, aligning perfectly with the demands of modern data centers.

- The Collective Strength of The Ultra Ethernet Consortium: The collaborative efforts of the Ultra Ethernet Consortium, with pivotal contributions from Edgecore and Broadcom, highlight the industry’s commitment to advancing Ethernet technology. This collaboration is setting new standards in networking, ensuring that Ethernet continues to evolve and meet the ever-growing demands of AI and HPC applications.

Engage with Us for Your Networking Solutions

As we conclude our exploration into the dynamic world of AI networking, the future appears both promising and transformative, driven by the principles of open networking and the innovations of technologies like those from Edgecore and Broadcom.

At the last OCP Global Summit, I was intrigued by presentations showcasing massive CPU clusters, networks with more than 2,000 switches, many thousands of GPUs, and extensive optical networking. It was a glimpse into the sheer scale of AI infrastructure evolving globally. Initially, I wondered whether such colossal systems were a distant reality for Europe, given their magnitude and complexity.

However, the trend in Europe seems to be steering towards a different kind of evolution: the rise of smaller, private AI clusters for enterprise applications and more. These setups, though not as vast as those presented at the Summit, are no less significant. They represent a shift towards accessible, customizable, and efficient AI networking solutions that can cater to a diverse range of enterprise needs.

As we embrace this future, STORDIS is committed to being at the forefront of this evolution, helping you navigate and aggregate these diverse networking landscapes. Whether you are looking to build large-scale AI infrastructures or smaller, enterprise-level clusters, our mission remains to guide and support you through these exciting developments in AI networking.

Together, let’s venture into this new era of AI networking, harnessing the power of open solutions and cutting-edge technologies to drive success in AI and ML initiatives, regardless of their scale.